Image classification of laundry with 99.5% accuracy.

In spring 2022, I took CS230: Deep Learning at Stanford. This was my first AI project to go through the full cycle of training, validation, and testing. Our goal was to attain the highest possible classification accuracy by applying a range of machine learning techniques.

Searching for Best Training

| Technique | Baseline model | Test-set F1 score improvement over baseline |
|---|---|---|
| Training from random initialization (no ImageNet pre-training) | ResNet50 | -28.7% |
| Data augmentation: ≤20% width/height translation, horizontal flip, ≤20% rotation/zoom | VGG16 | +4.0% |
| Data augmentation: 2.5X more data | VGG16, ResNet50 | +15.3%, +9.2% |
| Regularization search for L2 regularization and dropout | VGG16 | -1.9% |
| Switching architecture from VGG16 to ResNet50 | VGG16 | +5.0% |

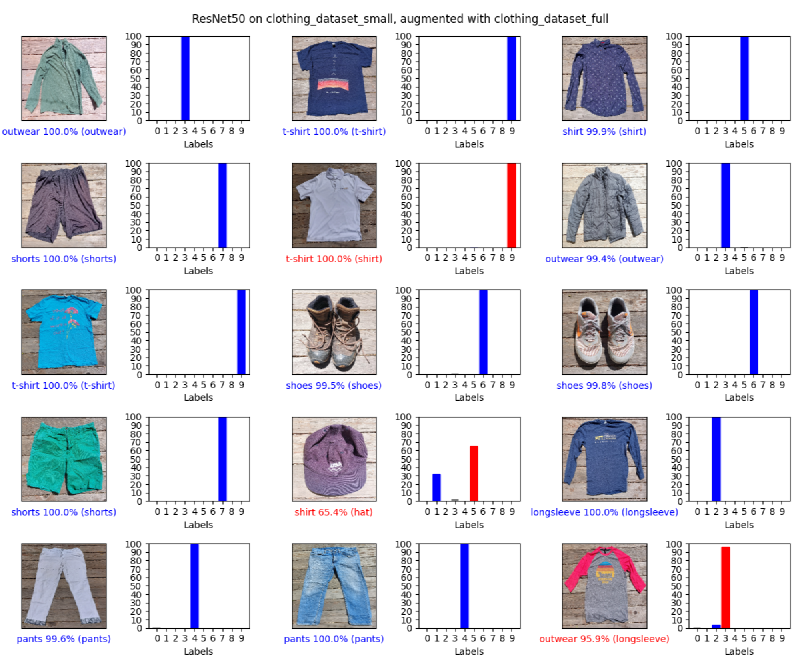
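To make the augmentation row in the table more concrete, here is a minimal pure-Python sketch of two of the listed transforms: a random horizontal flip and a random translation of up to 20% of the width and height. It is an illustration only, not the project's actual pipeline. The helper names (`hflip`, `translate`, `augment`), the nested-list image representation, and the zero fill for uncovered pixels are all assumptions made for this example. Rotation and zoom are left out because they need interpolation that an image library would normally handle.

```python
import random


def hflip(image):
    """Mirror an image (a list of pixel rows) left to right."""
    return [row[::-1] for row in image]


def translate(image, dx_frac, dy_frac, fill=0):
    """Shift an image by a fraction of its width/height, padding with `fill`."""
    h, w = len(image), len(image[0])
    dx, dy = round(dx_frac * w), round(dy_frac * h)
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            ny, nx = y + dy, x + dx
            # Pixels shifted outside the frame are dropped.
            if 0 <= ny < h and 0 <= nx < w:
                out[ny][nx] = image[y][x]
    return out


def augment(image, rng, max_shift=0.2):
    """Apply a random horizontal flip, then a random shift of at most max_shift."""
    out = hflip(image) if rng.random() < 0.5 else image
    dx = rng.uniform(-max_shift, max_shift)  # fraction of image width
    dy = rng.uniform(-max_shift, max_shift)  # fraction of image height
    return translate(out, dx, dy)


if __name__ == "__main__":
    # Hypothetical 5x5 "image" with distinct pixel values.
    img = [[r * 5 + c for c in range(5)] for r in range(5)]
    for row in augment(img, random.Random(0)):
        print(row)
```

Each call to `augment` produces a slightly different view of the same item, so the model sees more variation than the raw dataset contains.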

Visualizing Performance: Home Dataset

To gain insight into the performance of full-stack training, we:

- Combined the training subsets of two Kaggle datasets and trained a ResNet50 from random initialization on the combined set.
  - The datasets are clothing dataset small and Clothing dataset (full, high resolution).

- Created a dataset of my own personal clothing items, called the home dataset (matching the classes). This dataset was used as an evaluation set for unseen data.

The end result is shown in the figure below.

ResNet50 trained from random initialization, evaluated on the home dataset. Red text marks an incorrect prediction; text in parentheses is the actual class.

Findings

- Semantically blurry classes (e.g. long sleeve vs. outerwear) held back our accuracy

- A VGG16 pre-trained on ImageNet, then trained on our 2.5X larger dataset, yielded the best test-set accuracy of 99.5%

- Our model didn't generalize to unseen classes in a few-shot learning scenario (test-set F1 score was 35.9%), so there was room for future improvement
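The findings quote both accuracy and F1 score, and the two can diverge when some classes are rarely predicted correctly. The sketch below shows one way that can happen, assuming macro-averaged F1 (this write-up does not say which averaging was used). The labels and predictions are made up for illustration and are not project data. The function names are hypothetical.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores (macro averaging)."""
    classes = sorted(set(y_true) | set(y_pred))
    scores = []
    for c in classes:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        denom = 2 * tp + fp + fn
        # F1 = 2*TP / (2*TP + FP + FN); treated as 0 when the class never appears.
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


if __name__ == "__main__":
    # Made-up example: every "outerwear" item is mistaken for "long sleeve".
    y_true = ["long sleeve"] * 8 + ["outerwear"] * 2
    y_pred = ["long sleeve"] * 10
    print(accuracy(y_true, y_pred))            # 0.8
    print(round(macro_f1(y_true, y_pred), 3))  # 0.444
```

In this toy case accuracy looks respectable while macro F1 is much lower, because the missed class counts as much as the well-predicted one.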