I began my graduate AI coursework in Autumn 2021 with Stanford’s CS221 (Artificial Intelligence: Principles and Techniques). It was my first AI class, and for the class project I chose to reproduce the findings of the Point Completion Network (PCN) paper from Carnegie Mellon University.

For my dataset, I used Stanford’s Completion3D dataset, since it was cited by most of the major shape reconstruction papers at the time.

Baselining Distance Metrics

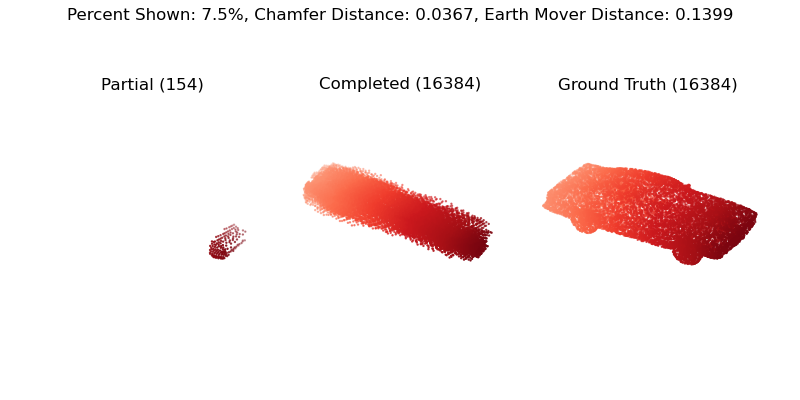

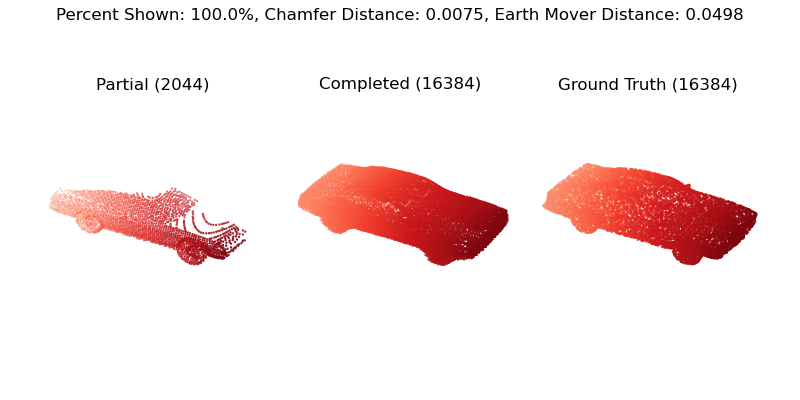

My first experiment was to feed in different percentages of the input partial point cloud (shown on the left in the figures above) and measure how far the reconstructed point cloud was from the ground-truth point cloud.
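A minimal sketch of this kind of sweep, in Python with NumPy. The cropping scheme is an assumption: the hypothetical `keep_fraction` keeps the points with the smallest coordinate along one axis, since the exact cropping method isn't described here. The hypothetical `complete_fn` callable stands in for the trained PCN model. Distance is scored with a per-point-averaged Chamfer Distance.

```python
import numpy as np


def keep_fraction(points: np.ndarray, fraction: float, axis: int = 0) -> np.ndarray:
    """Hypothetical cropping scheme: keep the `fraction` of points with the
    smallest coordinate along `axis`, simulating a less complete scan."""
    if not 0.0 < fraction <= 1.0:
        raise ValueError("fraction must be in (0, 1]")
    k = max(1, int(round(fraction * len(points))))
    order = np.argsort(points[:, axis])
    return points[order[:k]]


def chamfer_distance(a: np.ndarray, b: np.ndarray) -> float:
    """Symmetric Chamfer Distance with per-point averaging (non-squared)."""
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)
    return float(d.min(axis=1).mean() + d.min(axis=0).mean())


def sweep(complete_fn, partial: np.ndarray, ground_truth: np.ndarray, fractions):
    """Feed increasingly complete inputs to `complete_fn` (a stand-in for the
    completion model) and score each reconstruction against the ground truth."""
    results = {}
    for f in fractions:
        cropped = keep_fraction(partial, f)
        results[f] = chamfer_distance(complete_fn(cropped), ground_truth)
    return results
```

With the identity function as a placeholder model and the full cloud as ground truth, the score shrinks as the fraction grows and reaches zero at 100%. A real experiment would pass the trained network's inference call as `complete_fn` instead.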

Other Findings

- Reliable reconstruction required at least 10% of the original object to be present

- The point cloud distance metrics Chamfer Distance and Earth Mover’s Distance are not affected by the number of points, as long as the points are similarly distributed

- PCN has a fundamental limitation that keeps fine details of the ground-truth cloud from showing up in the reconstruction
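The two metrics in the second finding can be sketched as follows, assuming NumPy and SciPy are available. Chamfer Distance uses the averaged, non-squared form described in the PCN paper (conventions vary, and some works use squared distances). EMD is computed exactly for equal-sized clouds as an optimal one-to-one matching via `scipy.optimize.linear_sum_assignment`. The function names are illustrative, and the brute-force pairwise matrix is only practical for clouds of a few thousand points.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def pairwise_dist(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Euclidean distance between every point of a (N, 3) and b (M, 3)."""
    return np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)


def chamfer_distance(a: np.ndarray, b: np.ndarray) -> float:
    """Symmetric Chamfer Distance, averaged per point in each direction."""
    d = pairwise_dist(a, b)
    # Mean nearest-neighbour distance a->b plus b->a; the averaging is what
    # makes scores comparable between clouds with different point counts.
    return float(d.min(axis=1).mean() + d.min(axis=0).mean())


def earth_movers_distance(a: np.ndarray, b: np.ndarray) -> float:
    """Exact EMD for equal-sized clouds: mean distance under the optimal bijection."""
    if a.shape != b.shape:
        raise ValueError("this EMD sketch requires clouds with the same number of points")
    d = pairwise_dist(a, b)
    rows, cols = linear_sum_assignment(d)  # minimum-cost one-to-one matching
    return float(d[rows, cols].mean())
```

Because each term is a per-point mean, duplicating every point of one cloud leaves the Chamfer Distance unchanged. This is one way to see why these metrics track how points are distributed rather than how many there are.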